Shapes of AI

AI is searching for new physical bodies - is it enough to justify a special place in users' pockets?

Looking at screens, touching some of them, pushing buttons, and moving a mouse around are the main interaction patterns between humanity and technology these days. The form factors are limited mainly to screens of various sizes.

Honey, welcome home. Did you have a good day looking at screens at work?

Yes, screens were good today. How about your screens?

Mine were not so good. Let's watch something on our big screen.

Ok, I might touch my small screen while watching.

Looking at various screens is familiar and comfortable for most people. And since humans are creatures of habit, it's hard to convince them to start using something completely new. Many products have tried, but only a few have succeeded.

The screen-first interaction pattern is strongly connected with another one: application-first. "There's an app for that" is Apple's famous slogan, and it captures how use cases are fragmented across separate applications. Creating a spreadsheet? Editing a photo? Searching for a recipe? Following a football game? Watching videos? Posting to social media? Any other imaginable task, including tracking those tasks? There's an app for that.

Another category of devices, currently in the minority, is voice-first products. Typically, they are home or personal assistants like Alexa or Siri. They ditch the screen-first and application-first approach and instead act as an interface that interacts with apps on the user's behalf. The device decides for the user, selects one or more apps to complete the task in the background, and only presents the results. For example, Siri starts a timer app, or Alexa puts stuff in your Amazon basket. It's not entirely breaking news - people have been shouting at Siri or Alexa to turn on their lights at home for years now. But up until now, there were very few usable use cases. To put it bluntly, Siri and Alexa are quite dumb: they understand only precisely phrased questions and requests and can complete just the simplest tasks.

However, they are a small but essential step in a significant new direction - towards seamless integrations and a unified experience, away from the siloed apps we use today. But only with the recent advent of generative AI did the search for new interaction patterns really take off. Generative AI allows for much more robust, usable, and exciting interactions. Large language models, the backbone of today's AI tools, can understand so much more than the Siri or Alexa of the past. For the first time in history, we can design products built around truly functional AI. Just imagine a digital personal assistant that is actually useful and that can do things.

All these possibilities have led several companies to pursue new hardware form factors and to search for new bodies for the recently born generative AI. Among the AI-driven devices aiming to replace or complement smartphones, the Rabbit R1 and the Humane AI Pin stand out for their innovative approaches to digital interaction. Both are pioneers in using AI to simplify and enhance the user experience, and each has its own set of features that distinguishes it from traditional smartphones and from the other.

Humane AI Pin

AI Pin by Humane made headlines long before the official unveiling or shipping of a single unit to customers. It has generated considerable interest, especially among tech enthusiasts looking for the next big thing in personal AI devices. AI Pin is a screen-less (well, not entirely), square-shaped standalone wearable device that attaches to the wearer's clothing with a magnet. Equipped with a camera and various sensors, the AI Pin can scan the surrounding environment.

Designed as a digital personal assistant, it offers a range of functionalities through voice commands, like reading messages, replying to them, or searching the web. With its camera, it can also see the surrounding environment and describe it back - e.g., recognizing the fridge's contents and suggesting to the user what she might cook for dinner. It also uses a laser projector to create a user interface on the user's palm, navigable through gestures and voice commands - although this feature seems to be very finicky, even in promo videos.

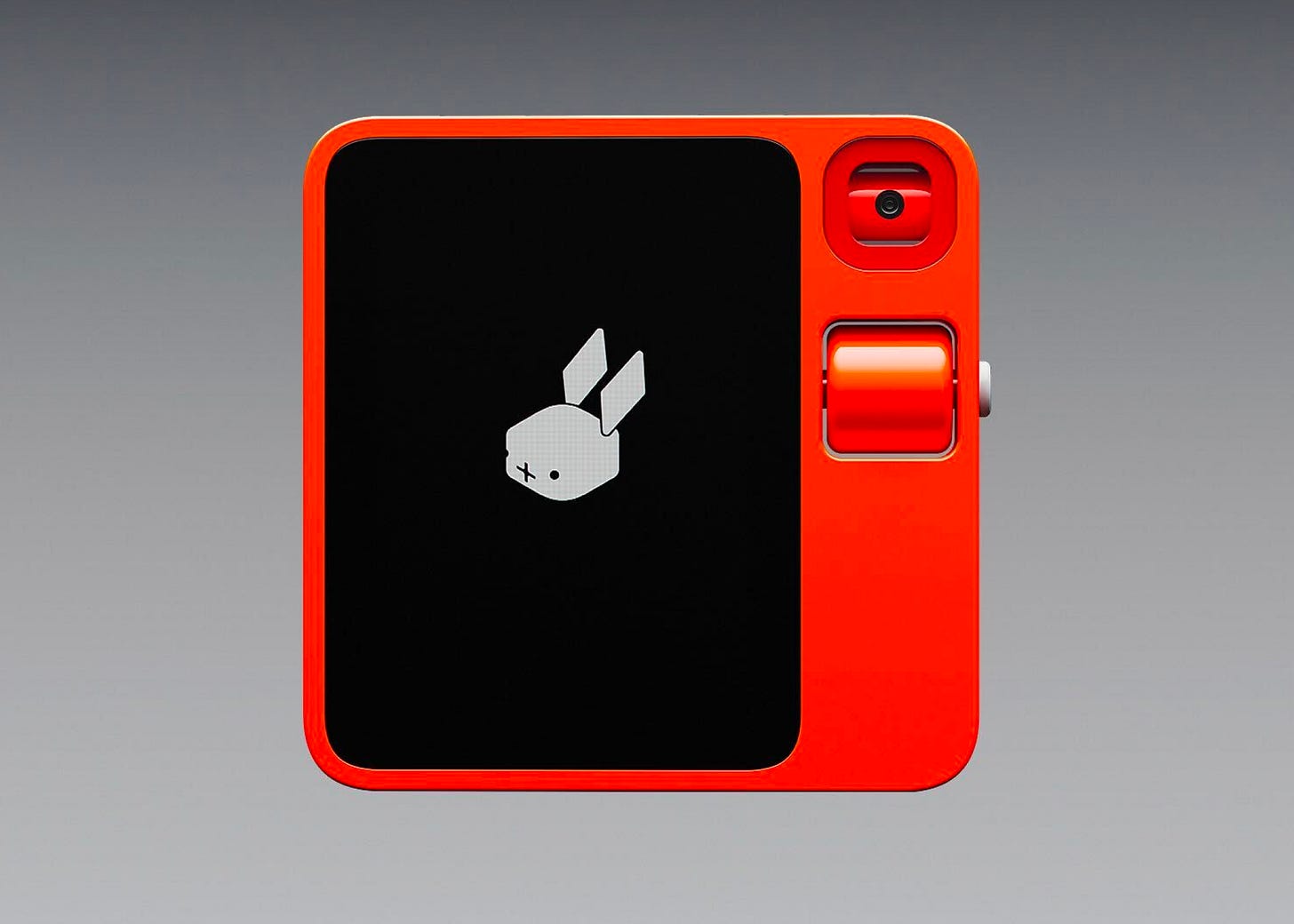

Rabbit R1

The Rabbit R1, a tiny orange box, is not a phone. But also not not a phone. The best description is probably a handheld device that can control your apps for you. My first thought when I saw this device was, "Couldn't this be an app?" It just feels like Siri, if she were any good.

The hardware of the R1 looks excellent. With its small rectangular body, roughly half the size of an iPhone, bright orange color, 2.88-inch touchscreen, and cool physical gimmicks like a scroll wheel and a rotating camera, it is something you just want to hold in your hand. The Teenage Engineering partnership is clearly visible here. So far, so good.

The R1 runs on Rabbit OS, powered by a "Large Action Model" (LAM) designed to act as a universal controller for apps, aiming to streamline interactions between the user and various digital services. LAM is supposed to deliver on the promise of actually being a helpful personal assistant by learning how to operate any app and then doing it for the user. This is the most promising part of the whole Rabbit story. ChatGPT and other LLMs can generate text or images, but the R1 is meant to actually do things, thanks to its ability to learn how various apps work.

Still destined to fail?

The emergence of devices like the Rabbit R1 and Humane AI Pin reflects a growing desire for smartphone alternatives that demand less of our time and attention while still providing powerful and intuitive digital interactions. Both devices are advertised as universal controllers for various apps or even as smartphone replacements. The problem is that a pretty capable controller of various apps is already in almost every person's pocket - their smartphone. And for some use cases, like checking your account balance, you want more privacy than a loudspeaker clipped to your jacket can offer.

Still, both devices bravely push the current boundaries and show what might be possible in the future. But I believe there's much larger potential in smartphones integrating AI more deeply into their existing operating systems - and then the leap towards a more universal app controller will be just an update away, not a new device.